Exposed: Critical AI Security Risks That Threaten Your Business Data

Did you know that 85% of AI projects fail to meet their objectives, with poor data quality being the primary culprit?

As CEO of CyberMedics, I’ve witnessed firsthand how AI security risks threaten business data across industries, leading to devastating consequences. According to recent studies, the average cost of a data breach has reached an all-time high of $4.88 million, while organizations take approximately 277 days to identify and contain these breaches.

The human element remains a critical factor, contributing to 74% of breaches through errors or privilege misuse. Additionally, with 85% of security professionals attributing the rise in cyberattacks to generative AI, protecting your business data has never been more crucial. In fact, in 2025, privacy regulations will cover the personal data of 75% of the world’s population, making it essential for organizations to understand and address these emerging threats.

Building Strong AI Security Foundations

At CyberMedics, securing AI systems begins with understanding their unique architecture and risk landscape. Recent data shows that adversarial attacks and data poisoning have become major sources of concern for AI implementations. Consequently, building robust security foundations requires a comprehensive approach that addresses both technical and operational aspects.

Security Architecture Basics

A well-designed AI security architecture demands multi-layered defenses. Specifically, organizations must implement strict data governance policies that control sensitive information within AI responses. Furthermore, our experience at CyberMedics has shown that secure AI architecture requires three essential components:

- Input validation and sanitization to prevent adversarial attacks

- Output filtering and moderation to block harmful responses

- Continuous monitoring systems for detecting anomalies

Moreover, AI security gateways play a crucial role in protecting training traffic and ensuring it originates from authorized sources. These gateways also analyze frameworks and libraries to validate that teams use datasets appropriately for inducing specific model behaviors.

Risk Assessment Framework

The NIST AI Risk Management Framework (AI RMF) provides a structured approach for identifying and managing AI-specific risks. Notably, this framework addresses both current and emerging risks, particularly important since some AI risks remain challenging to measure quantitatively.

Organizations must recognize that risk can emerge from third-party data, software, or hardware, as well as how these components are used. Therefore, all parties involved in AI development and deployment should actively manage risks in their systems, whether standalone or integrated.

The framework emphasizes that effective risk management requires organizational commitment at senior levels. At CyberMedics, we’ve observed that successful AI security implementation often demands cultural change within organizations. Particularly, teams must adopt a prevention-centric approach rather than solely focusing on threat detection.

Regular audits serve as a cornerstone of this framework, helping ensure AI models operate as expected without malicious influence. These audits, coupled with a well-structured governance framework, enforce essential security controls around AI usage within organizations.

Data Protection Strategies

Protecting sensitive data in AI systems demands a multi-layered approach. At CyberMedics, we’ve observed that implementing robust data protection strategies significantly reduces the risk of breaches, which currently average $4.88 million in costs. (https://www.ibm.com/think/insights/ai-data-security)

Encryption Methods

End-to-end encryption stands as the cornerstone of AI data protection, essentially transforming sensitive information into unreadable formats. Through our work with healthcare and government sectors, we’ve identified that standardizing data formats across different types, primarily through optimal encryption and anonymization strategies, simplifies data exchange and governance.

Our recommended encryption approach includes:

- Data lifecycle encryption for model development and inference

- AI model file encryption

- Edge device protection for vehicles, sensors, and medical devices

- Quantum-resistant encryption protocols

Access Control Systems

Implementing a zero-trust approach undoubtedly strengthens AI platform security. At CyberMedics, we emphasize that when AI systems work on behalf of users, they should access only the data that users have permission to view. Subsequently, this requires identity governance programs that control system access, data usage, and output accessibility.

Fine-grained access controls play a vital role in preventing unauthorized data exposure. These controls must comply with regulations like GDPR, HIPAA, and CCPA. Through our experience in operational technology security, we’ve found that regular audits of access patterns help identify potential security risks before they materialize.

Data Handling Protocols

Data handling protocols begin with thorough identification and classification of information. Organizations must store data securely using encryption while regularly auditing and updating security protocols. Principally, we recommend collecting only necessary data to reduce privacy risks and storage costs.

Data sanitization remains critical during AI development to prevent sensitive information exposure. Nevertheless, maintaining data quality is equally important – using clean, relevant, and up-to-date data helps avoid biases and inaccuracies. Through our work across industries, we’ve seen that addressing biases in data and models used helps prevent perpetuating existing inequalities.

For third-party vendor management, carefully review terms and conditions, choosing only trusted data suppliers with robust security infrastructure. Additionally, implement solutions like threat detection systems alongside comprehensive incident response plans for a holistic AI risk mitigation strategy.

AI Model Security Best Practices

Security breaches targeting AI models have surged dramatically, with recent studies showing that only 10% of organizations have reliable systems to measure bias and privacy risks in large language models. Protecting AI models requires a comprehensive approach focusing on both training data and ongoing monitoring.

Training Data Protection

Securing training data stands as a foundational element in AI model security. Initially, organizations must implement strict data curation and validation processes to prevent data poisoning attacks. At CyberMedics, we’ve found that maintaining detailed audit trails of data movement helps track and secure sensitive information effectively.

Secure Development Pipelines play a crucial role in protecting AI models. Although virtual machines and containers provide isolation from the rest of IT infrastructure, organizations must simultaneously implement robust encryption for training data at rest and in transit.

Our work with government agencies has shown that data minimization primarily reduces exposure risks. Indeed, collecting and utilizing only necessary data for specific AI applications not only accelerates training operations but also minimizes potential data loss during breaches.

Model Monitoring Tools

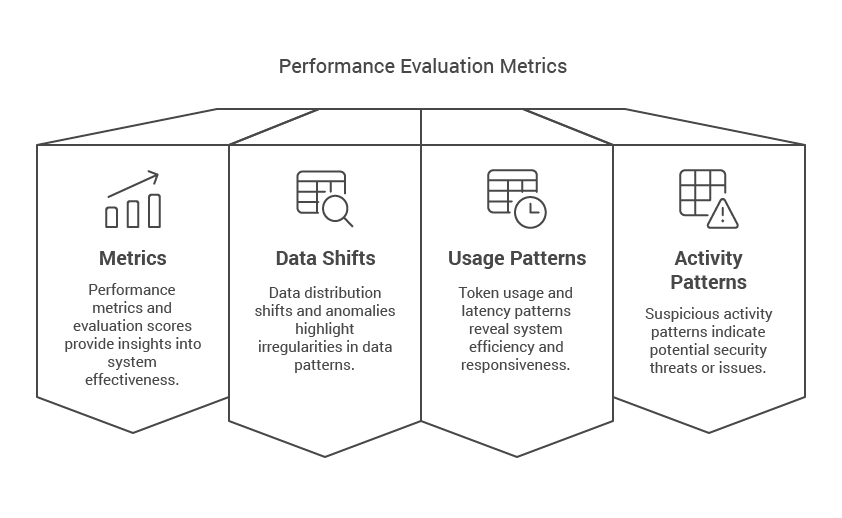

Real-time monitoring emerges as a critical defense against AI security threats. Based on our experience across industries, effective model monitoring should track:

- Performance metrics and evaluation scores

- Data distribution shifts and anomalies

- Token usage and latency patterns

- Suspicious activity patterns

Automated Alert Systems have proven invaluable in our client implementations. Generally, these systems trigger notifications when monitoring metrics cross predetermined thresholds. Through CyberMedics’ partnerships with financial institutions, we’ve seen how automated monitoring can detect and prevent potential security breaches before they escalate.

Proactive monitoring remains essential for maintaining model integrity. Overall, organizations should focus on detecting drift over time by setting baselines using training data. Soon after deployment, teams must establish short-term drift detection mechanisms using historical production data to identify immediate concerns.

At CyberMedics, we emphasize the importance of embedding analysis in monitoring strategies. This approach helps teams proactively identify when unstructured data begins drifting from expected patterns. Our monitoring solutions integrate with many widely used alerting tools, ensuring rapid response to potential security threats across different environments.

Implementing AI Privacy Controls

Privacy stands as the cornerstone of responsible AI development, with recent studies showing that AI technology requires astronomical amounts of data to function effectively.

Privacy by Design Approach

Privacy by design (PbD) represents a fundamental shift in how organizations approach data protection. Primarily, this framework demands embedding privacy considerations into products and services from inception. At CyberMedics, we emphasize collecting only necessary data with clearly defined purposes, ensuring privacy isn’t merely an afterthought.

Key privacy principles that guide our implementations include:

- Proactive risk prevention instead of reactive measures

- Privacy as the default setting

- End-to-end security throughout the data lifecycle

- User-centric controls and transparency

Compliance Checklist

In light of evolving regulations, organizations must maintain strict compliance standards. The GDPR, besides introducing privacy by design in Article 22, embeds ethics into law within the EEA. Through our work at CyberMedics, we’ve developed comprehensive compliance protocols that address:

Data Collection and Purpose: Collect only data absolutely necessary for specific purposes. Organizations must clearly define data collection objectives for targeted advertising, product development, or fraud prevention.

User Rights and Control: Implement accessible mechanisms for users to request data deletion or opt-out of certain processing activities. Chiefly, this includes providing clear explanations about how information will be used.

Technical Measures: Deploy privacy-enhancing technologies (PETs) that protect personal information. In similar fashion to our healthcare sector implementations, these measures should include de-identification, differential privacy, and federated learning techniques.

Regular Audit Process

Regular audits serve as the backbone of effective privacy control. Comparatively, our experience shows that organizations taking a structured approach to auditing demonstrate stronger privacy protection outcomes. The audit process should focus on:

Continuous Monitoring: Track AI system performance, data quality, and decision-making patterns. First thing to remember is establishing baseline metrics using training data for detecting immediate concerns.

Risk Assessment: Evaluate potential privacy vulnerabilities, including unauthorized access and data breaches. Through CyberMedics’ partnerships, we’ve seen how automated monitoring can detect privacy issues before they escalate.

Documentation and Reporting: Maintain comprehensive records of monitoring activities, audit findings, and corrective actions. These records serve as evidence of privacy commitment and can be presented to supervisory authorities when required.

Security Testing and Validation

Through extensive testing at CyberMedics, we’ve discovered that traditional security validation methods often fall short when applied to AI systems. Recent data shows that 58% of organizations express concerns about incorrect or biased AI outputs, highlighting the need for specialized testing approaches.

Penetration Testing Methods

AI-specific penetration testing requires a distinct methodology that addresses unique vulnerabilities. Primarily, when scoping AI penetration tests, organizations must evaluate both the AI components and their integration with existing infrastructure. At CyberMedics, we focus on three critical areas:

- Training data evaluation and potential leakage risks

- Model-specific weakness exploitation

- Robustness against targeted attacks

For organizations using APIs from established LLM providers, security testing should focus on configuration validation and unauthorized access prevention. Hence, our testing protocols align with emerging frameworks like OWASP (https://owasp.org )Top 10 for Large Language Model Applications and MITRE ATLAS. https://atlas.mitre.org/

Vulnerability Scanning Tools

Modern vulnerability scanning demands sophisticated tools capable of detecting AI-specific threats. Through our work across healthcare and government sectors, we’ve observed that effective scanning tools must provide comprehensive coverage of both traditional and AI-specific vulnerabilities.

Binary Analysis Approach offers superior accuracy compared to source code scanning, as it examines the entire application stack. Accordingly, this method proves particularly valuable when dealing with proprietary AI systems where source code access might be limited.

In addition to conventional scanning, AI-specific tools must detect:

- Prompt injection vulnerabilities

- Insecure output handling

- Model theft attempts

- Excessive agency issues

Performance Metrics

Measuring AI security effectiveness requires specific performance indicators. Thus, at CyberMedics, we’ve developed a comprehensive metrics framework based on industry standards. Key performance indicators include:

Technical Metrics:

- Mean Time to Detect (MTID): Aiming for 10-second detection time

- Mean Time to Respond (MTTR): Target of 10-minute response time

- False Positive and True Positive Rates

- System Logging Coverage

Our experience shows that effective metrics must consider both technical and operational aspects. Evidently, organizations need to assess external validity of measurements, recognizing that metrics taken in one context might not generalize to others.

For AI model validation, we employ specialized metrics such as:

- Mahalanobis distance for distribution analysis https://en.wikipedia.org/wiki/Mahalanobis_distance

- Precision and recall measurements for accuracy assessment

- Word Error Rate (WER) for speech recognition systems

Regular testing against these metrics helps identify potential vulnerabilities before they can be exploited. Ultimately, our testing protocols incorporate continuous monitoring and validation to ensure AI systems maintain their security posture over time.

Creating an AI Security Response Plan

Establishing an effective AI security incident response plan stands as a critical defense against evolving threats. Based on my experience leading CyberMedics’ cybersecurity initiatives, I’ve observed that AI incidents require distinct response strategies compared to traditional security breaches.

Incident Response Steps

At CyberMedics, we align our incident response framework with NIST’s proven methodology, which encompasses four crucial phases:

Detection and Assessment Phase Organizations must implement robust monitoring systems to identify AI-specific anomalies. Primarily, this involves setting up appeal and override capabilities, allowing users to report issues through feedback forms, emails, or dedicated hotlines.

Containment Strategy Short-term containment remains paramount in limiting incident impact. Ultimately, the containment plan should address:

- System modifications to reduce harmful impacts

- Understanding downstream dependencies

- Documenting all containment actions

Investigation and Root Cause Analysis Through our work at CyberMedics, we’ve found that identifying the cause of AI incidents often proves more complex than traditional security breaches. Meanwhile, organizations must preserve evidence for potential legal actions while conducting thorough forensic analysis.

Recovery and Implementation The recovery phase focuses on three main approaches:

- Pre-processing adjustments before model training

- In-processing modifications to model architecture

- Post-processing changes to output filtering

Team Responsibilities

Successfully managing AI security incidents demands a well-structured team with clearly defined roles. The Incident Response Director serves as the central coordinator, overseeing all response efforts. At CyberMedics, we’ve implemented a tiered response structure:

Tier 1: Alert Handlers – These team members monitor security tools and perform initial incident triage. Nonetheless, they focus on prioritizing alerts based on potential impact.

Tier 2: Incident Responders – These specialists conduct deeper analysis of identified incidents. In contrast to Tier 1, they possess advanced skills in complex investigations and familiar with normal network processes.

Tier 3: Field Experts – These specialists focus on specific domains within AI security. They often lead proactive threat hunting initiatives and specialized investigations.

Tier 4: Security Operations Center (SOC) Manager – The SOC manager oversees daily operations and ensures alignment with broader security strategies. Their responsibilities include:

- Coordinating training sessions

- Managing team resources

- Maintaining incident response documentation

The Computer Security Incident Response Team (CSIRT) should include representatives from various departments, including IT, legal, risk management, data science, and public relations.

Regular testing and updates of the incident response plan are essential. Organizations should conduct post-incident reviews to identify strengths and areas for improvement. At CyberMedics, we emphasize maintaining detailed records of incidents and response measures, which prove invaluable for future reference and compliance requirements.

Conclusion

Throughout my years leading CyberMedics, experience has repeatedly shown that AI security demands a comprehensive, proactive approach. Organizations face unprecedented challenges protecting their AI systems, from data poisoning attempts to sophisticated model attacks. These threats demand robust security architectures, stringent data protection protocols, and comprehensive monitoring systems.

My team and I have witnessed firsthand how proper security measures significantly reduce breach risks while ensuring AI systems deliver reliable, unbiased results. Successful AI security implementation requires strong foundations: encrypted data handling, continuous monitoring, regular audits, and well-defined incident response procedures.

Looking ahead, AI security threats will become increasingly sophisticated. Organizations must stay vigilant, regularly updating their security measures while maintaining strict compliance with evolving regulations.

AI Threats Are Evolving—Is Your Security Ready?

Don’t let AI-powered cyberattacks compromise your business. Strengthen your security posture with CyberMedics’ expertise. Get started today.

Remember, protecting your AI systems isn’t just about implementing security measures – it’s about building a security-first culture that anticipates and prevents threats before they materialize. Your organization’s data security depends on making this commitment.

FAQs

Q1. What are the primary security risks associated with AI systems?

The main security risks include data poisoning, adversarial attacks, model theft, and biased outputs. Organizations must implement robust security measures to protect sensitive data and ensure AI models operate as intended.

Q2. How can businesses protect their data when implementing AI solutions?

Businesses can protect their data by implementing end-to-end encryption, adopting a zero-trust approach, using fine-grained access controls, and regularly auditing security protocols. It’s also crucial to collect only necessary data and maintain its quality to reduce privacy risks.

Q3. What role does incident response play in AI security?

An effective incident response plan is critical for addressing AI security breaches. It should include steps for detection, containment, investigation, and recovery. Having a well-structured team with clearly defined roles is essential for managing AI security incidents efficiently.

Q4. How can organizations ensure their AI models are secure and unbiased?

Organizations can secure their AI models by implementing robust training data protection, continuous monitoring tools, and regular security testing. To address bias, they should use diverse and representative datasets, conduct thorough audits, and implement privacy-by-design principles.

Q5. What are the potential consequences of AI security breaches for businesses?

AI security breaches can lead to significant financial losses, with the average cost of a data breach reaching $4.88 million. They can also result in reputational damage, legal consequences, and loss of customer trust. Additionally, compromised AI systems may produce biased or incorrect outputs, potentially leading to discriminatory practices or poor decision-making.