How Unauthorized Shadow AI Creates Enterprise Security Holes

Roughly 74% of the ChatGPT accounts used in workplaces are non-corporate ones, creating a shadow AI crisis that threatens enterprise security. As the CEO of CyberMedics, I’ve witnessed firsthand how this unauthorized AI usage has evolved into a critical security concern. Recent data shows that 27.4% of the data entered into these AI tools is sensitive, a concerning jump from 10.7% last year.

The consequences of this shadow data problem are severe. Organizations that suffer breaches involving unauthorized data sources take 291 days to identify and contain them, and face average costs of $4.88 million. These numbers paint a clear picture of the growing security challenges that businesses face when employees bypass official channels for AI tool usage.

In this comprehensive analysis, we’ll examine the security holes created by shadow AI, explore detection strategies, and outline practical governance solutions to protect your organization’s sensitive information.

Understanding Shadow AI Risk Landscape

The rapid adoption of AI tools has created an unprecedented security challenge in enterprise environments. Research shows that 73.8% of ChatGPT accounts used in workplaces are non-corporate ones, lacking essential security controls. Moreover, this pattern extends to other AI platforms, with personal account usage reaching 94.4% for Gemini and 95.9% for Bard.

What Makes AI Tools Go Shadow?

The accessibility of free and low-cost AI tools has primarily driven the shadow AI phenomenon. Additionally, employees face competitive pressure to enhance productivity, while recent graduates bring their AI tool familiarity from academic settings into the workplace.

Common Entry Points for Unauthorized AI

Through our cybersecurity assessments, we’ve identified the categories of sensitive data that most often leave the organization through shadow AI, each expressed as a share of all sensitive data entered into AI tools:

- Customer support data (16.3%)

- Source code (12.7%)

- R&D materials (10.8%)

- Marketing materials and internal communications (6.6% each)

- HR records (3.9%)

Current State of Enterprise AI Security

The enterprise AI security landscape presents a complex challenge. While 80% of Fortune 500 companies now have teams using corporate ChatGPT accounts, the adoption of secure enterprise versions isn’t keeping pace with employee usage. Recent security audits show that roughly half of the source code (50.8%), research and development materials (55.3%), and HR records (49%) entered into AI tools goes through non-corporate accounts.

Real Impact of Shadow AI on Security

The latest security studies reveal an alarming trend in sensitive data exposure through shadow AI tools. The percentage of sensitive information fed into AI systems has increased 156% in only a year, and it continues to climb.
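The 156% figure can be checked against the shares quoted in the introduction (10.7% last year, 27.4% this year). A minimal sketch of the arithmetic; the helper name `percent_increase` is ours, chosen for illustration:

```python
def percent_increase(old: float, new: float) -> float:
    """Relative change from old to new, expressed as a percentage."""
    if old == 0:
        raise ValueError("old value must be non-zero")
    return (new - old) / old * 100.0


# Share of sensitive data in AI inputs: 10.7% last year, 27.4% this year.
growth = percent_increase(10.7, 27.4)
print(f"{growth:.0f}%")  # prints "156%"
```

Note that this is a relative increase. In absolute terms the share rose by 16.7 percentage points.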

Data Exposure Statistics 2024

The types of sensitive data exposed through shadow AI channels present a particularly concerning pattern:

- Customer support information (16.3% of total sensitive data)

- Source code repositories (12.7%)

- Research and development materials (10.8%)

- Marketing materials and internal communications (6.6% each)

- HR and employee records (3.9%)

- Financial documents (2.3%)

Studies also show that legal documents, although they account for only 2.4% of sensitive corporate data exposure, face the highest risk: 82.8% of them are processed through unauthorized AI accounts.

Cost of Shadow AI Breaches

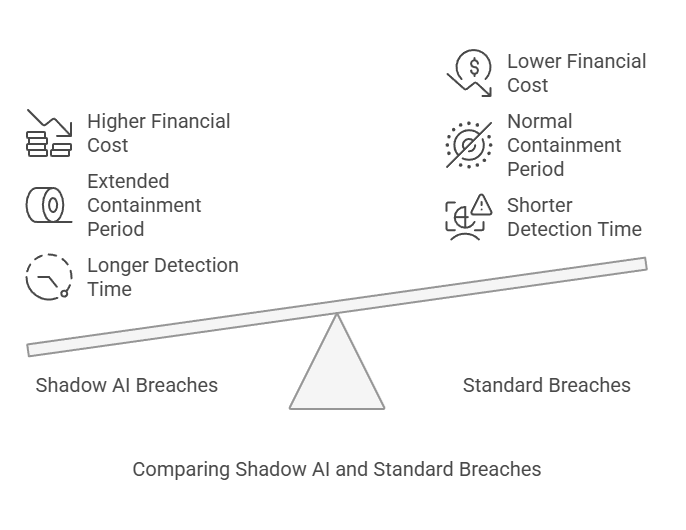

The financial ramifications of shadow AI breaches are substantial. Breaches involving shadow data now cost organizations an average of $4.88 million. Notably, these incidents take 26.2% longer to identify and 20.2% longer to contain compared to standard breaches.

As a result of these extended detection periods, the average lifecycle of a shadow AI breach extends to 291 days. This prolonged exposure particularly affects data stored across multiple environments, with breached data in public clouds incurring costs up to $5.17 million.

The impact extends beyond immediate financial losses. Research indicates that 75% of the increase in average breach costs stems from lost business and post-breach response activities. Furthermore, intellectual property theft has risen by 26.5%, with the cost per stolen record increasing from $156 to $173 in 2024 – an 11% uptick.

At CyberMedics, we’ve observed that organizations storing data across multiple environments face heightened risks, with 40% of breaches involving data distributed across various platforms. This fragmentation of data storage particularly complicates breach detection and containment efforts, ultimately leading to increased financial impact and reputational damage.

Technical Vulnerabilities Created

Shadow AI tools create technical vulnerabilities that extend far beyond simple data exposure. Through our extensive security audits at CyberMedics, we’ve identified three critical areas where unauthorized AI usage compromises enterprise security infrastructure.

API Security Gaps

Undocumented APIs, often called shadow APIs, present a significant security challenge. These interfaces frequently operate without proper security controls or monitoring. Our analysis reveals that APIs form critical connections between AI systems and other software, making them prime targets for attackers seeking unauthorized access.

Rather than following standard security protocols, shadow APIs typically bypass essential safeguards. The resulting vulnerabilities show up mainly as weak authentication mechanisms, insecure endpoints, and susceptibility to input manipulation attacks. Organizations must therefore implement comprehensive API security measures, including strong authentication, input validation, and continuous monitoring protocols.
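Two of the measures named above, strong authentication and input validation, can be illustrated with a short sketch. It assumes requests are signed with an HMAC-SHA256 shared secret and that a field called `prompt_id` must match an allow-list pattern. The secret, the field name, and the pattern are all hypothetical and not taken from any particular product:

```python
import hashlib
import hmac
import re

# Hypothetical shared secret; in practice it would come from a secrets manager.
SECRET_KEY = b"example-secret-do-not-use"

# Hypothetical allow-list pattern for a "prompt_id" request field.
PROMPT_ID_PATTERN = re.compile(r"[A-Za-z0-9_-]{1,64}")


def sign(body: bytes, key: bytes = SECRET_KEY) -> str:
    """Compute the hex HMAC-SHA256 signature of a request body."""
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def is_authentic(body: bytes, signature: str, key: bytes = SECRET_KEY) -> bool:
    """Check a signature using a constant-time comparison."""
    expected = sign(body, key)
    return hmac.compare_digest(expected.encode(), signature.encode())


def is_valid_prompt_id(value: str) -> bool:
    """Accept only identifiers that match the allow-list pattern in full."""
    return PROMPT_ID_PATTERN.fullmatch(value) is not None


body = b'{"prompt_id": "abc-123"}'
print(is_authentic(body, sign(body)))         # a correctly signed body passes
print(is_valid_prompt_id("abc; DROP TABLE"))  # rejected by the allow-list
```

The comparison uses `hmac.compare_digest` rather than `==` so that the time taken does not reveal how many leading characters of a forged signature were correct.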

Authentication Bypass Methods

The emergence of sophisticated authentication bypass techniques poses a growing threat. Our security team has identified several prevalent methods attackers use to circumvent security measures:

- Token theft through session cookie capture

- MFA fatigue attacks using repeated authentication requests

- Adversary-in-the-middle attacks that intercept communication

- Brute force attempts against weak authentication systems

These bypass methods have become more sophisticated with AI integration. Our research shows that attackers now leverage AI-powered tools to automate the discovery of complex vulnerabilities. These tools can also optimize phishing campaigns and mimic human behavior to bypass traditional security measures.
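Of the methods listed above, MFA fatigue is one of the easier ones to spot in logs, because it shows up as a burst of authentication prompts for a single user. The sketch below flags such bursts with a sliding window. The event format, the five-minute window, and the threshold of five prompts are assumptions for illustration and should be tuned to each environment:

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, List, Tuple

WINDOW_SECONDS = 300  # assumed look-back window
MAX_PROMPTS = 5       # assumed number of prompts tolerated per window


def flag_mfa_fatigue(events: Iterable[Tuple[str, float]],
                     window: float = WINDOW_SECONDS,
                     max_prompts: int = MAX_PROMPTS) -> List[str]:
    """Return users who received more than max_prompts MFA prompts in any window.

    events: (user, unix_timestamp) pairs, sorted by timestamp.
    """
    recent: Dict[str, Deque[float]] = defaultdict(deque)
    flagged: List[str] = []
    for user, ts in events:
        prompts = recent[user]
        prompts.append(ts)
        # Drop prompts that have fallen out of the look-back window.
        while prompts and ts - prompts[0] > window:
            prompts.popleft()
        if len(prompts) > max_prompts and user not in flagged:
            flagged.append(user)
    return flagged
```

A flagged user is a prompt for investigation, not proof of an attack; a misbehaving client can generate the same pattern.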

Data Pipeline Weaknesses

Data pipeline vulnerabilities present another critical concern in shadow AI implementations. Our investigations show that expanding data volumes often exceed existing infrastructure capabilities. As a result, these pipelines struggle to maintain data integrity while processing increasing amounts of information.

The primary weaknesses we’ve identified include:

- Inadequate validation processes for AI training data

- Insufficient encryption for data in transit and at rest

- Limited anomaly detection capabilities

- Compromised data integrity during transformation processes

Overall, organizations storing data across multiple environments face heightened risks. Our analysis indicates that flaws in data pipeline integrity can severely impact strategic planning and decision-making processes. Nevertheless, implementing comprehensive data validation protocols and robust encryption mechanisms can significantly mitigate these risks.
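As a rough illustration of the validation and integrity checks mentioned above, the sketch below validates a record against a small schema and uses a SHA-256 digest to confirm that a payload arrived unchanged. The field names in `REQUIRED_FIELDS` are hypothetical. A digest on its own only detects modification, so encryption in transit and at rest is still needed for confidentiality:

```python
import hashlib
from typing import Dict, List

# Hypothetical schema for records entering the pipeline.
REQUIRED_FIELDS = ("record_id", "source", "text")


def validate_record(record: Dict[str, str]) -> List[str]:
    """Return a list of validation problems; an empty list means the record passed."""
    problems = []
    for field in REQUIRED_FIELDS:
        if not record.get(field):
            problems.append(f"missing or empty field: {field}")
    return problems


def digest(payload: bytes) -> str:
    """SHA-256 digest recorded at the source stage."""
    return hashlib.sha256(payload).hexdigest()


def arrived_intact(received: bytes, expected_digest: str) -> bool:
    """Compare the digest recomputed at the destination with the one from the source."""
    return digest(received) == expected_digest
```

Recording the digest before each transformation stage and checking it afterwards narrows down where in the pipeline data was altered.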

Detection and Monitoring Strategy

Detecting unauthorized AI usage requires sophisticated monitoring capabilities. At CyberMedics, we’ve developed a multi-layered approach that combines network analysis with advanced behavioral monitoring to identify shadow AI activities.

Network Traffic Analysis

Our security teams use AI-powered analytics to automatically detect and analyze potential threats in network traffic. This approach focuses on identifying unusual patterns that signal unauthorized AI tool usage. To that end, our systems analyze terabytes of daily network log data to spot anomalies in real time.
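The simplest layer of this kind of analysis is matching outbound requests against a watch-list of AI service domains. The sketch below is a simplified stand-in for the analytics described above, not our production tooling. It assumes a whitespace-separated proxy log with the user in the second column and the destination host in the third, and the domain list is illustrative only:

```python
from collections import Counter
from typing import Iterable, Set

# Illustrative watch-list; a real deployment would maintain and update its own.
AI_DOMAINS: Set[str] = {"chat.openai.com", "chatgpt.com", "gemini.google.com"}


def count_ai_requests(log_lines: Iterable[str],
                      domains: Set[str] = AI_DOMAINS) -> Counter:
    """Count requests per user to watch-listed AI domains.

    Assumed line format: "<timestamp> <user> <host> <bytes>".
    """
    hits: Counter = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) < 3:
            continue  # skip malformed lines
        user, host = parts[1], parts[2].lower()
        # Match the domain itself or any of its subdomains.
        if host in domains or any(host.endswith("." + d) for d in domains):
            hits[user] += 1
    return hits
```

Domain matching only covers known services, which is why it is paired with behavioral monitoring that can pick up tools not yet on the list.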

User Behavior Monitoring

User and Entity Behavior Analytics (UEBA) forms the cornerstone of our shadow AI detection strategy. UEBA systems gather comprehensive data, including user activities, network traffic, and access logs, to establish a baseline of ‘normal’ behavior. This baseline helps identify potential security threats through:

- Advanced threat detection that spots abnormal behavior or anomalies in user activities

- Data loss prevention monitoring that detects unusual access patterns or data transfers

- Automated response and remediation integration with other security tools

Our UEBA implementation continuously adapts to changes in overall user patterns to fine-tune alert thresholds. For employees performing similar work, it creates peer-group comparisons, enabling detection of anomalies relative to colleagues. This approach has proven effective in identifying shadow AI usage patterns.
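The peer-group comparison can be sketched with a plain z-score: each user’s metric is compared with the mean and standard deviation of colleagues doing similar work. This is a simplified stand-in, not a description of any UEBA product’s internals. The metric (megabytes uploaded to external services per day) and the threshold of 2.0 are assumptions:

```python
from statistics import mean, pstdev
from typing import Dict, List


def peer_outliers(uploads_mb: Dict[str, float], threshold: float = 2.0) -> List[str]:
    """Return users whose value sits more than `threshold` standard
    deviations above the mean of their peer group."""
    values = list(uploads_mb.values())
    if len(values) < 2:
        return []
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # everyone behaves identically; nothing stands out
    return [user for user, v in uploads_mb.items() if (v - mu) / sigma > threshold]


# Hypothetical peer group: seven typical users and one heavy uploader.
team = {"a": 10, "b": 12, "c": 11, "d": 9, "e": 10, "f": 11, "g": 10, "h": 200}
print(peer_outliers(team))  # prints "['h']"
```

One design caveat: with a population standard deviation, no z-score in a group of n users can exceed the square root of n - 1, so very small peer groups need a lower threshold or a robust statistic such as the median absolute deviation.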

Through our monitoring systems, security teams receive detailed context and user activity records crucial for rapid incident response. The integration of UEBA with Extended Detection and Response (XDR) provides deeper insights across various data sources like networks, endpoints, and clouds. This comprehensive visibility makes it far harder for unauthorized AI activity to go undetected.

Building Effective AI Governance

Establishing robust AI governance requires a structured approach that extends beyond basic security measures. At CyberMedics, we’ve witnessed how proper governance frameworks significantly reduce the risk of shadow AI incidents.

Policy Framework Development

An effective AI governance policy must first address human-centric design and oversight. Our framework emphasizes transparency in AI operations and clear accountability for AI-driven decisions. The governance structure should include three key committees:

- A Steering Committee comprising senior stakeholders from compliance, IT, risk management, legal, and business units

- A Risk and Compliance Committee focusing on regulatory adherence and operational risk

- A Technical Review Committee including experts in enterprise architecture, AI, data science, and cybersecurity

Organizations implementing dynamic governance models show 18.6% better security outcomes. This success stems primarily from their ability to adapt quickly to emerging threats while maintaining robust oversight of AI operations.

Employee Training Programs

The cornerstone of effective AI governance lies in comprehensive employee education. Organizations with well-structured AI training programs experience 74% higher compliance rates. Coupled with role-specific training modules, these programs should cover:

- Legal and ethical AI usage guidelines

- Data privacy and security protocols

- Risk management fundamentals

- Secure AI implementation practices

Following the same approach as our cybersecurity training at CyberMedics, we’ve developed specialized AI security courses for different organizational roles. This targeted training has proven particularly effective, with business leaders receiving advanced modules on strategic decision-making and risk assessment.

Compliance Requirements

The regulatory landscape for AI governance continues to evolve rapidly. The EU AI Act, which entered into force in August 2024 with obligations phasing in over the following years, stands as the world’s first comprehensive regulatory framework for AI. Under these regulations, organizations face penalties ranging from EUR 7.5 million to EUR 35 million, or 1% to 7% of worldwide annual turnover, depending on the type of noncompliance.
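To see how the fixed amount and the turnover percentage interact, the sketch below computes the ceiling for the top tier quoted above (EUR 35 million or 7% of worldwide annual turnover). It assumes the higher of the two values applies, which is the rule for larger undertakings. The function name and the turnover figures are hypothetical:

```python
def fine_cap_eur(worldwide_turnover_eur: float,
                 fixed_cap_eur: float,
                 turnover_pct: float) -> float:
    """Upper bound of a fine, assuming the higher of the two limits applies."""
    return max(fixed_cap_eur, worldwide_turnover_eur * turnover_pct / 100.0)


# Hypothetical company with EUR 2 billion in worldwide annual turnover.
large = fine_cap_eur(2_000_000_000, 35_000_000, 7.0)
print(f"EUR {large:,.0f}")  # prints "EUR 140,000,000"

# Hypothetical company with EUR 100 million in turnover: the fixed amount dominates.
small = fine_cap_eur(100_000_000, 35_000_000, 7.0)
print(f"EUR {small:,.0f}")  # prints "EUR 35,000,000"
```

For any company with turnover above EUR 500 million, the percentage limit exceeds the fixed amount in this tier, so exposure scales with company size.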

To maintain compliance, organizations must implement:

- Company-wide model risk management initiatives

- Comprehensive inventory of AI models

- Regular peer reviews for high-risk AI solutions

- Human intervention failsafe mechanisms

Organizations implementing these compliance measures show a 40% reduction in AI-related security incidents. Furthermore, proactive compliance strategies help organizations identify and address potential security gaps 26% faster than reactive approaches. https://www.ibm.com/think/insights/ai-compliance
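Several of the requirements listed above (a model inventory, peer reviews for high-risk solutions, and a human intervention failsafe) lend themselves to a simple automated check. The sketch below is one possible shape for such an inventory. The record fields and the 180-day review interval are assumptions for illustration and are not taken from any regulation:

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class ModelRecord:
    """One entry in a hypothetical AI model inventory."""
    name: str
    owner: str
    high_risk: bool
    last_peer_review: Optional[date] = None
    human_override: bool = False


def compliance_gaps(inventory: List[ModelRecord],
                    today: date,
                    max_review_age_days: int = 180) -> List[str]:
    """List the gaps found for high-risk models in the inventory."""
    gaps = []
    for model in inventory:
        if not model.high_risk:
            continue
        review = model.last_peer_review
        if review is None or (today - review).days > max_review_age_days:
            gaps.append(f"{model.name}: peer review missing or overdue")
        if not model.human_override:
            gaps.append(f"{model.name}: no human intervention failsafe")
    return gaps
```

Running a check like this on a schedule turns the inventory from a static document into an early warning for lapsed reviews.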

Conclusion

As AI adoption accelerates, organizations lacking effective governance and security measures face increased risks. Companies leveraging security AI and automation detect and contain breaches nearly 100 days faster than those without such capabilities.

With AI’s growing role in enterprise environments, organizations must act decisively to balance innovation with security. Investing in robust AI governance and security infrastructure will not only protect sensitive enterprise data but also reduce breach costs and enhance overall cyber resilience.

Our team at CyberMedics has identified three essential steps for protecting enterprise data:

- Establishing clear AI usage policies backed by employee training

- Implementing advanced detection systems for unauthorized AI activity

- Creating structured governance frameworks that adapt to emerging threats

The stakes continue rising as AI tools become more sophisticated. Each passing month brings new attack vectors and increased risk exposure. Don’t let AI-powered cyberattacks compromise your business. Strengthen your security posture with CyberMedics’ expertise. Get started today.

FAQs

Q1. What is shadow AI and why is it a security concern for enterprises?

Shadow AI refers to the unauthorized use of AI tools by employees through non-corporate accounts. It’s a major security concern because it can lead to sensitive data exposure, creating vulnerabilities that may result in costly data breaches and security incidents.

Q2. How prevalent is the use of unauthorized AI tools in the workplace?

According to recent data, about 74% of the ChatGPT accounts used in workplaces are non-corporate ones, and the share is even higher for other AI tools. This widespread use of unauthorized AI creates significant security risks for enterprises.

Q3. What types of sensitive information are most commonly exposed through shadow AI?

The most commonly exposed sensitive information through shadow AI includes customer support data, source code, R&D materials, marketing materials, internal communications, and HR records. Legal documents, while comprising a smaller percentage, face the highest risk of exposure.

Q4. How can organizations detect unauthorized AI usage?

Organizations can detect unauthorized AI usage through sophisticated monitoring strategies, including network traffic analysis and user behavior monitoring. Advanced analytics, machine learning algorithms, and User and Entity Behavior Analytics (UEBA) are effective tools for identifying shadow AI activities.

Q5. What steps can companies take to mitigate the risks associated with shadow AI?

To mitigate shadow AI risks, companies should establish clear AI usage policies, implement comprehensive employee training programs, develop robust AI governance frameworks, and deploy advanced detection systems for unauthorized AI activity. Additionally, staying compliant with evolving AI regulations is crucial for risk management.